Currently studying a grad level Statistical Inference course. I'd just like some clarification regarding how to obtain the Rao-Cramer Lower Bound for a statistic.

So far, I understand that you obtain the RCLB by deriving the Fisher Information of an estimator. My question is:

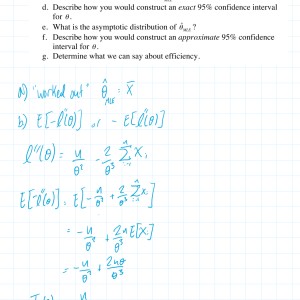

Is the RCLB always the inverse of the Fisher Information? I'd like to know if my approach was correct. But more so, I'd like to know if I can always just take the inverse of the Fisher Information and call it the RCLB. Clarification and exlanation of any key facts that I might be missing would be greatly appreciated. Here is an example I'm working on at the moment. part b of this queastion posted. It's Thank you!

PS: I don't need any of the solutions worked out. Just some calrification on this concept. Thank you!

Cactus90

Cactus90

Answer

It's tricky to answer open ended questions instead of concrete problems, but I think I can answer your concrete questions and provide a clear picture.

Let's agree on what RCLB says. It says that for an unbiased estimator, the variance of said estimator will always be at least the inverse of the Fisher information.

Now, let's look at your questions.

"Is the RCLB always the inverse of the Fisher Information?"

The bound is stated in terms of the inverse of the fisher information. So yes.

But be careful, because this doesn't mean that you will always find an unbiased estimator that attains said bound. In some situations, the best unbiased estimator (one with minimum variance) will have a variance still greater than the inverse of the Fisher information. In other situations there isn't a best unbiased estimator. One can always find estimators with ever decreasing variance (but always larger than the inverse of the Fisher information).

"I'd like to know if my approach was correct. But more so, I'd like to know if I can always just take the inverse of the Fisher Information and call it the RCLB" .

The inverse of the information matrix is the RCLB. That's the theorem.

The theorem works under some mild technical conditions on the density function. Mainly, derivative of the log density with respect to the parameter in question must exist, said derivative must be integrable with respect to the density (x variable), and the integration and derivative process must be interchangeable. To compute the Fisher information using a second derivative, one needs the same as above but with the second derivative instead of just the first.

Your main/real question then is how to compute Fisher information in a guaranteed way. For virtually all problems and real life applications, the method of the second derivative is used and gives the right answers.

It is technically possible to come up with situations where the Fisher information can't be computed or when the RCLB doesn't hold. But this is more of a mathematical curiosity with little interest in the context of Statistics. It would be difficult to find an interesting example where things don't work out (because one of the mild technical conditions doesn't hold)

Mathe

Mathe

-

Rage, this was exactly what I needed to be informed on to boot! This puts all the important info on my notes regarding RCLB into one so I highly appreciate it. Thank you! I hope you have a good day!

- answered

- 3528 views

- $8.00

Related Questions

- How do you calculate per 1,000? And how do you compensate for additional variables?

- Variance of Autoregressive models, AR(1)

- In immediate need of getting a statistics paper done!!

- stats- data analysis

- Probabilities

- Sample size calculation for a cross sectional healthcare study

- Classification problem using one or more Simple Linear Perceptron

- Quantitative reasoning

Did my file post correctly? It was supposed to be a PNG image file.

Yes, I can view the file.

Thank you :)